Introduction

Ever since I set up alerting, the QNAP has kept reporting a RAID problem. The alert reads:

RAID array 'md13' on 10.10.100.244 is in degraded state due to one or more disks failures. Number of spare drives is insufficient to fix issue automatically

In other words, the RAID array is degraded.

Steps

First, list the RAID arrays on the QNAP:

[/] # ls /dev/md*

/dev/md1 /dev/md13 /dev/md2 /dev/md256 /dev/md321 /dev/md322 /dev/md9

Then I checked the status of the two arrays that were alerting. Both looked like this:

mdadm -D /dev/md13

/dev/md13:

Version : 1.0

Creation Time : Wed Jun 10 19:03:54 2020

Raid Level : raid1

Array Size : 458880 (448.20 MiB 469.89 MB)

Used Dev Size : 458880 (448.20 MiB 469.89 MB)

Raid Devices : 65

Total Devices : 5

Persistence : Superblock is persistent

Intent Bitmap : Internal

Update Time : Wed Jul 28 09:09:32 2021

State : clean, degraded

Active Devices : 5

Working Devices : 5

Failed Devices : 0

Spare Devices : 0

Name : 13

UUID : 1ae7f759:1ee7bdcd:388738a6:0f8e85fd

Events : 109478

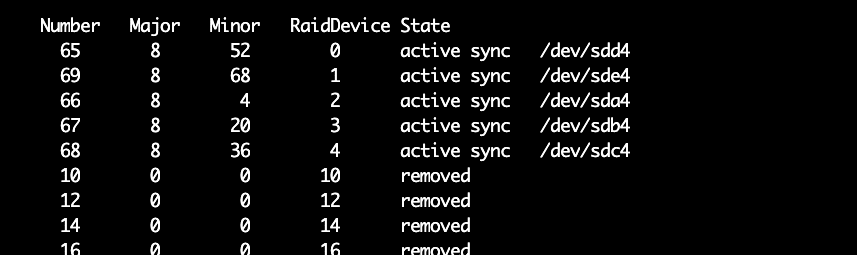

Number Major Minor RaidDevice State

65 8 52 0 active sync /dev/sdd4

69 8 68 1 active sync /dev/sde4

66 8 4 2 active sync /dev/sda4

67 8 20 3 active sync /dev/sdb4

68 8 36 4 active sync /dev/sdc4

10 0 0 10 removed

12 0 0 12 removed

14 0 0 14 removed

16 0 0 16 removed

18 0 0 18 removed

20 0 0 20 removed

22 0 0 22 removed

24 0 0 24 removed

26 0 0 26 removed

28 0 0 28 removed

30 0 0 30 removed

32 0 0 32 removed

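The array is actually defined with 65 device slots, even though only 5 disks are members. That is too many rows to list, so I have not shown them all.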
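The key observation in the output above is that `Failed Devices` is 0 while `Raid Devices` is far larger than `Active Devices`. Here is a minimal Python sketch of that check, assuming the `mdadm -D` output has already been captured as text. The function names `parse_mdadm_detail` and `looks_oversized` are made up for illustration, and the sample is an abridged copy of the output shown in this post.

```python
def parse_mdadm_detail(text):
    """Collect the 'Key : Value' header lines of `mdadm -D` output into a dict."""
    fields = {}
    for line in text.splitlines():
        # Split on the first " : " so timestamps (which contain ':') stay intact.
        key, sep, value = line.partition(" : ")
        if sep:
            fields[key.strip()] = value.strip()
    return fields


def looks_oversized(fields):
    """True when no device has failed but the array has more slots than members."""
    raid = int(fields["Raid Devices"])
    active = int(fields["Active Devices"])
    failed = int(fields["Failed Devices"])
    return failed == 0 and active < raid


# Abridged copy of the md13 output from this post.
sample = """\
/dev/md13:
Creation Time : Wed Jun 10 19:03:54 2020
Raid Level : raid1
Raid Devices : 65
Total Devices : 5
State : clean, degraded
Active Devices : 5
Working Devices : 5
Failed Devices : 0
Spare Devices : 0
"""

fields = parse_mdadm_detail(sample)
print(fields["State"], looks_oversized(fields))
```

If `looks_oversized` returns False for a degraded array, a disk has probably really failed and this post's fix does not apply.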

Next, look at mdstat:

cat /proc/mdstat

md13 : active raid1 sdd4[65] sdc4[68] sdb4[67] sda4[66] sde4[69]

458880 blocks super 1.0 [65/5] [UUUUU____________________________________________________________]

bitmap: 1/1 pages [64KB], 65536KB chunk
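The `[65/5]` counter on the status line means 65 configured slots and 5 active members. As a rough sketch, the same counter could be pulled out of `/proc/mdstat` for every array at once. The helper name `slot_counts` is hypothetical, and the regular expressions only assume array names of the form `md<number>`, as seen in this post.

```python
import re

# Matches the "[configured/active]" counter on a status line, e.g. "[65/5]".
SLOTS = re.compile(r"\[(\d+)/(\d+)\]")
# Matches the header line of an array, e.g. "md13 : active raid1 ...".
ARRAY = re.compile(r"^(md\d+)\s*:")


def slot_counts(mdstat_text):
    """Map each md array name to (configured_slots, active_members)."""
    counts = {}
    current = None
    for line in mdstat_text.splitlines():
        header = ARRAY.match(line.strip())
        if header:
            current = header.group(1)
            continue
        found = SLOTS.search(line)
        if current and found:
            counts[current] = (int(found.group(1)), int(found.group(2)))
            current = None
    return counts


# Sample copied from this post, with the row of underscores shortened.
sample = """\
md13 : active raid1 sdd4[65] sdc4[68] sdb4[67] sda4[66] sde4[69]
      458880 blocks super 1.0 [65/5] [UUUUU____]
      bitmap: 1/1 pages [64KB], 65536KB chunk
"""

for name, (slots, active) in slot_counts(sample).items():
    if slots > active:
        print(f"{name}: {slots} slots but only {active} active members")
```

On the NAS itself, the text would come from reading `/proc/mdstat` instead of the inline sample.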

Sure enough: 65 slots, but only 5 members. At this point the fix is simply to set the device count back to the real number:

[/] # mdadm --grow /dev/md13 -n 5

raid_disks for /dev/md13 set to 5
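Since more than one array was alerting, the same command has to be repeated per array. The sketch below only builds and prints the command lines (a dry run) so they can be reviewed before running anything. `grow_command` is a hypothetical helper, the `md0` entry is made-up data standing in for a healthy array, and the approach assumes the RAID1 situation from this post where no disk has actually failed.

```python
import shlex


def grow_command(array, active_members):
    """Return the argv that sets the array's slot count to its member count."""
    if active_members < 1:
        raise ValueError("refusing to build a command for an array with no members")
    return ["mdadm", "--grow", f"/dev/{array}", "-n", str(active_members)]


# array name -> (configured slots, active members)
# md13 uses the numbers from this post; md0 is a made-up healthy array.
arrays = {"md13": (65, 5), "md0": (5, 5)}

for name, (slots, active) in arrays.items():
    if slots > active:
        # Print only; nothing is executed here.
        print(shlex.join(grow_command(name, active)))
```

Printing instead of executing is a deliberate choice: on other RAID levels, changing the device count triggers a reshape, so each command is worth checking by hand first.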

After that, the array was back to normal.

I have no idea how QNAP ended up configuring a RAID array with 65 disks.

Feel free to follow my blog: www.bboy.app

Have Fun